AWS API Template

Getting your application’s back end up and running on Amazon Web Services (AWS) is not a trivial exercise, especially if you want a robust and extensible result that will support a modern development process.

Here’s a plug-and-play AWS API template that offers the following features:

-

Produces a secure Amazon Web Services (AWS) API using the Serverless Framework.

-

Features both public and private endpoints.

-

Secured by an AWS Cognito User Pool supporting native username/password authentication and one federated identity provider (Google).

-

Configured to act as both the authentication provider and a secure remote API for my Next.js Template on the front end.

-

Efficient and highly configurable. You should be able to get it up and running on your own domain, in your own infrastructure, in just a few minutes with edits to nothing but environment variables.

-

Deployable from the command line to multiple AWS CloudFormation Stacks, each exposing an independent environment (e.g.

dev,test, andprod), with its own authentication provider, at configurable endpoints. -

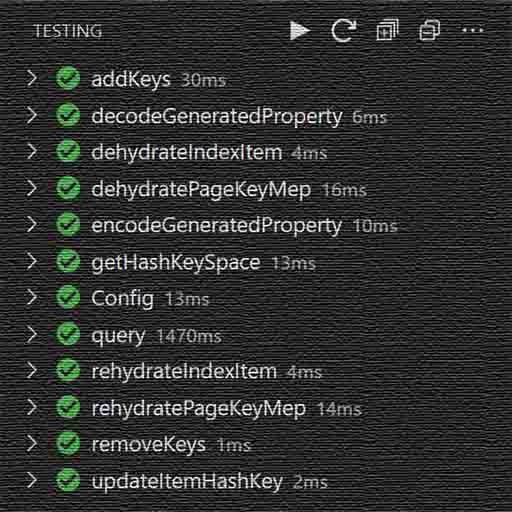

Unit testing support with

mochaandchai. Includes examples and a sweet testing console! -

Built-in backwards compatibility. Every major release triggers the deployment of an independent Stack on every environment. Share key resources across Stacks so you can bring your users with you across major versions.

-

Automatically build and deploy the relevant Stack following every code push with AWS CodePipeline. See Automated Deployment below for more info.

-

Code formatting at every save & paste with

prettier. -

One-button release to GitHub with

release-it.

This template’s repo branches are in fact hooked into CodePipeline to support deployments to demo environments. Consequently you will see many references to the karmanivero.us domain. Obviously, you’ll need to replace these with references to your own domain.

See it on GitHub! Clone the Repo!

The Tech Stack

This template represents a specific solution to a bunch of specific problems. It makes a number of highly opinionated choices.

Node.js & ES6

Choose your own poison. I like Javascript.

The AWS Lambda execution environment now supports Node v18, which of course features full native ES6 support. If you are accustomed to building for the lowest common denominator with Webpack & Babel, rejoice: it just isn’t necessary here!

This probably makes for a much simpler project structure than you are accustomed to.

The Serverless Framework

There are many different ways to script the creation of AWS resources.

The AWS CLI is the gold standard. It’s maximally powerful, but very low level. It’s probably smarter than I am.

AWS Amplify is new, super powerful, and supported by a great UI. It’s also very HIGH-level and doesn’t yet enjoy broad community support.

The Serverless Framework is a well-supported, open-source tool that generates and deploys AWS CloudFormation stacks from a single configuration file. It is opinionated with respect to best practices, offers a ton of plugins, and is accessible to the talented amateur, of which I am one.

There are other choices as well, but we’re using the Serverless Framework here because it seemed like a reasonably safe choice that offers plenty of room to grow.

Worth noting that I tried & rejected these three key Serverless Framework plugins as either inadequate to the task or too unstable to trust:

The necessary functionality is in the template; I just found better ways of getting there.

Environments, API Versions, Stages & Stacks

This is important! This document uses specific terms in specific ways, and it will help if we are all on the same page.

Some key definitions:

- An Environment is a logical step in your development process. This template currently supports three environments: dev, test, and prod. See Some Thoughts About DevOps below for ideas about how to use these effectively.

- An API Version is a distinct major version of your project. Major versions introduce breaking changes, so when you bump your major version, you want to keep your previous versions operating to support backward compatibility. This project defaults to API Version v0; subsequent values would be v1, v2, and so on.

- Stage relates specifically to AWS API Gateway. API Gateway supports multiple “environments” and “versions” (see above) on a single API Gateway instance. We will deploy each environment version to its own API Gateway, so only one Stage each. This is normally a key term in Serverless deployments, but this template handles Stage construction for you, so I’ll try to use this term as little as possible in this document to avoid confusion. Examples of Stages are aws-api-template-v0-dev, aws-api-template-v1-prod, and so on.

- When you deploy a specific API Version into a specific Environment, this template creates a completely independent CloudFormation Stack. A Stack contains all of the resources associated with that deployment, which may be updated in future deployments to the same Stack. Examples of Stacks are aws-api-template-v0-dev, aws-api-template-v1-prod, and so on.

Stages and Stacks are PHYSICALLY very different, but LOGICALLY equivalent. To avoid confusion, in this document I will refer to Stacks rather than Stages wherever possible.
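The example names above all follow one pattern: service name, API Version, Environment. Here is a minimal sketch of that pattern. The helper name stackName is hypothetical and is not part of the template, which builds these names inside its own configuration.

```javascript
// Hypothetical helper (not part of the template): composes a Stack or Stage
// name from the service name, API Version, and Environment.
const stackName = (serviceName, apiVersion, env) =>
  [serviceName, apiVersion, env].join('-');

console.log(stackName('aws-api-template', 'v0', 'dev')); // aws-api-template-v0-dev
console.log(stackName('aws-api-template', 'v1', 'prod')); // aws-api-template-v1-prod
```

Note that the Environment is always part of the name here, including prod.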

Project Configuration

This template deploys multiple Environments across multiple API Versions in a highly configurable fashion. Both the project as a whole and individual environments include secrets: configurations that need to exist in your dev environment to support local testing and manual deployment, but should NOT be pushed to your code repository.

The key AWS configuration file in the project is serverless.yml. Nominally this file would contain most of this stuff, but I thought it would be a good idea to abstract configuration data away from this highly structured file.

Everybody understands .env environment variable files, so that seemed to be a great way to go, which is nominally supported by the Serverless Dotenv Plugin. Unfortunately, I couldn’t get it to work.

So I eliminated this dependency and am relying instead on my own get-dotenv package, which adds some handy features to the very robust dotenv-cli tool, actually does everything the plugin only promises, and works like a charm.

Because of this, you will almost NEVER run sls directly! Instead, you will preface it with npx getdotenv and specify your .env file directories & target environment, like this:

npx getdotenv -p ./ ./env -e dev -- sls deploy --verbose

… except you wouldn’t do THAT either, because this command is now encapsulated in the package script npm run deploy.

More info on this in the relevant sections below. Later I will encapsulate this approach and some other useful features into a CLI wrapper for sls. (#10)
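To make the idea of layered .env files concrete, here is a rough sketch of how several files could be merged into one set of variables. This is NOT how get-dotenv is implemented. The parser is deliberately simplistic, and the precedence order (later files win) is an assumption made for illustration only.

```javascript
// Parse minimal KEY=VALUE text. No quoting or multi-line support: a sketch only.
const parseDotenv = (text) =>
  Object.fromEntries(
    text
      .split(/\r?\n/)
      .map((line) => line.trim())
      .filter((line) => line && !line.startsWith('#') && line.includes('='))
      .map((line) => {
        const i = line.indexOf('=');
        return [line.slice(0, i).trim(), line.slice(i + 1).trim()];
      })
  );

// Merge layers left to right. ASSUMPTION: later layers override earlier ones.
const mergeLayers = (...texts) => Object.assign({}, ...texts.map(parseDotenv));

const merged = mergeLayers(
  'API_VERSION=v0\nROOT_DOMAIN=mydomain.com', // .env
  'GITHUB_TOKEN=my-token', // .env.local
  'GOOGLE_CLIENT_ID=*', // env/.env.dev
  'GOOGLE_CLIENT_SECRET=*' // env/.env.dev.local
);

console.log(merged);
```

The point of the layering is that public values can be committed while the .local files carrying secrets stay on your machine.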

Release Management

This template supports automated release management with release-it.

Releases are numbered according to Semantic Versioning. Every release generates a release page at GitHub with release notes composed of your commit comments since the last release.

In the near future (#10), the template will pick up on your major version number to populate API Version, which is a factor driving Stack creation. See Environments, API Versions, Stages & Stacks above for more info.

Meanwhile, you’ll need to populate the API_VERSION environment variable manually in .env.
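Until that automation lands, the mapping from package version to API Version is simple enough to sketch. The helper below is hypothetical and only shows the rule implied above: the major version number, prefixed with v.

```javascript
// Hypothetical helper: derives an API Version (e.g. v0) from a Semantic
// Versioning string by keeping only the major version number.
const apiVersionFromSemver = (version) => {
  const match = /^v?(\d+)\.\d+\.\d+/.exec(version);
  if (!match) throw new Error(`Not a semantic version: ${version}`);
  return `v${Number(match[1])}`;
};

console.log(apiVersionFromSemver('0.2.1')); // v0
console.log(apiVersionFromSemver('1.0.0-beta.1')); // v1
```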

To create a new release, just run this command:

npm run release

Setting Up Your Dev Environment

Use the Visual Studio Code IDE in order to leverage relevant extensions. Not an absolute requirement but you’ll be happy if you do.

These instructions are written from the perspective of Windows OS. If you are a Mac or Linux user, you may need to make some adjustments.

They also assume you have local administrator permissions on your development machine.

Install Applications & Global Packages

This template is a Node.js project. Node.js is now in v19, but the latest AWS Lambda Node.js execution environment is v18. The current Node.js LTS version is v18.12.1, so we’ll use that one.

Rather than install Node.js directly, it is better to install it & manage versions with the Node Version Manager (NVM). Follow these instructions to configure your system:

- If you already have Node.js installed on your machine, uninstall it completely and remove all installation files. You can easily use NVM to reinstall & switch between other desired Node versions.

- Follow these instructions to install NVM on your system. This is a Windows-specific implementation! Mac/Linux users, adjust accordingly.

- Once NVM is installed on your system, open a terminal with System Admin Permissions and run the following commands:

      # windows only
      Set-ExecutionPolicy -ExecutionPolicy Unrestricted -Scope CurrentUser -Force

      # all platforms
      nvm install 18.12.1
      nvm use 18.12.1
      npm install -g serverless

- Install Git for your operating system from this page.

- Install Visual Studio Code from this page.

- WINDOWS ONLY: There is an alias conflict between the serverless package and the PowerShell Select-String command. To resolve it, open VS Code. In a terminal window, enter code $profile. A file named Microsoft.PowerShell_profile.ps1 will open in your code editor. Add the following line to this file, then save and close it:

      Remove-Item alias:sls

  If you choose not to resolve this, then at any point in these instructions where I use sls, you should use serverless instead.

- Restart your machine.

Create a new GitHub Repository

Click here to clone this template into your own GitHub account.

Do you already have a repository based on this template? No need to do this then.

Clone the Project Repository

Navigate your VS Code terminal to the directory you use for code repositories and run this command with appropriate replacements to clone this repo. You may be asked to log into GitHub.

git clone https://github.com/<my-github-account-or-organization-name>/<my-project-name>.git

Open the newly created local repository folder in VS Code.

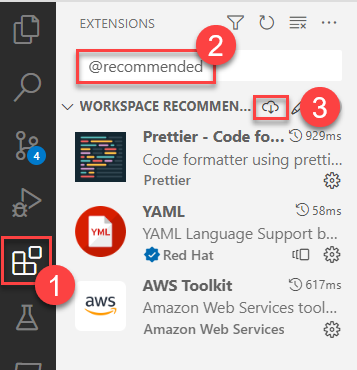

If this is your first time opening the folder, you should be asked to install recommended VS Code extensions. Install them.

If VS Code didn’t ask, follow these steps to install all workspace-recommended extensions:

- Open the VS Code Extensions tab.

- Enter @recommended into the search box.

- Click the Download link.

Zero the package version and install dependencies by running these commands:

npm version 0.0.0

npm install

Set the version in package.json to 0.0.0.

Vulnerabilities

At the time of this writing, running npm install will generate the following

vulnerability warning:

6 vulnerabilities (3 high, 3 critical)

If you run npm audit, you will find that all of these vulnerabilities relate

to the following dev dependencies, all of which are to do with docs generation:

    > npm list trim
    # @karmaniverous/aws-api-template@0.2.1
    # └─┬ concat-md@0.5.0
    #   └─┬ doctoc@1.4.0
    #     └─┬ @textlint/markdown-to-ast@6.0.9
    #       └─┬ remark-parse@5.0.0
    #         └── trim@0.0.1

    > npm list underscore
    # @karmaniverous/aws-api-template@0.2.1
    # ├─┬ concat-md@0.5.0
    # │ └─┬ doctoc@1.4.0
    # │   └── underscore@1.8.3
    # └─┬ jsdoc-to-markdown@8.0.0
    #   └─┬ jsdoc-api@8.0.0
    #     └─┬ jsdoc@4.0.0
    #       └── underscore@1.13.6

These vulnerable dependencies support the development environment only and will NOT be included in your production code!

Create Local Environment Variable Files

Look for these files in your project directory:

- .env.local.template

- env/.env.dev.local.template

- env/.env.test.local.template

- env/.env.prod.local.template

Copy each of these files and remove the template extension from the copy.

Do not simply rename these files! Anybody who pulls your repo will need these templates to create the same files in his own local environment.

In the future, this will be accomplished with a single CLI command. (#10)

Connect to GitHub

This template supports automated release management with release-it.

If you use GitHub, create a Personal Access Token

and add it as the value of GITHUB_TOKEN in .env.local.

If you use GitLab, follow these instructions and place your token in the same file.

For other release control systems, consult the release-it README.

You can now publish a release to GitHub with this command:

npm run release

Connect to AWS

You will need to connect to AWS in order to interact with AWS resources during development.

Generate an Access Key Id and Secret Access Key for either…

- Your AWS Root account (not recommended)

- An IAM user with admin permissions (or at least enough to support development)

If you aren’t able to do this, ask your AWS administrator.

Add those two values in the appropriate spots in .env.local.

Test Your Setup

Enter the following command in a terminal window:

npm run offline

A local server should start with an endpoint at http://localhost:3000/v0-dev/hello. If you navigate to this endpoint in a browser, you should see a blob of JSON with the message Hello world!
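For orientation, a handler that produces that response could look roughly like the sketch below. This is an illustration, not the template’s actual handler code. It assumes the usual API Gateway Lambda proxy response shape (statusCode, headers, body), and the function name get is borrowed from the handler naming used in serverless.yml. In a real handler module it would be exported.

```javascript
// Illustrative public handler (the template's real code may differ).
// Returns a Lambda proxy response whose body is a JSON string.
const get = async () => ({
  statusCode: 200,
  headers: { 'Content-Type': 'application/json' },
  body: JSON.stringify({ message: 'Hello world!' }),
});

// Local smoke test: invoke the handler directly and print the body.
get().then((response) => console.log(response.body));
```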

Deploying to AWS

Every AWS deployment of this project either creates or updates a Stack.

Which Stack gets created or updated depends on two factors:

- The Environment chosen at deployment time.

- The API Version configured in .env.

Each Stack contains all of the AWS resources associated with that deployment, and exposes a unique set of API & authentication endpoints.

The assumption is that these endpoints will map onto a custom domain, so there are some configurations to set and a little work to do in AWS before you can deploy a Stack to AWS.

Preparing AWS

Follow these steps to prepare your AWS account to receive your deployment. Where you are asked to set an environment variable in this section, set it at .env:

- Choose a name for your service (e.g. my-aws-api). This will be the root of every resource ID related to your application. It must begin with a letter; contain only ASCII letters, digits, and hyphens; and not end with a hyphen or contain two consecutive hyphens. Enter your service name for environment variable SERVICE_NAME.

- Choose a root domain (e.g. mydomain.com) and host its DNS at AWS Route 53. Enter your root domain for environment variable ROOT_DOMAIN.

- Request & validate a certificate from the ACM Console that covers domains <ROOT_DOMAIN> and *.<ROOT_DOMAIN>. Enter the certificate ARN for environment variable CERTIFICATE_ARN.

- Choose an API subdomain (e.g. api). All of your APIs will be exposed at <API_SUBDOMAIN>.<ROOT_DOMAIN>. Double-check your Route 53 zone file to make sure it isn’t already assigned to some other service! Enter your API subdomain for environment variable API_SUBDOMAIN.

- Choose your API Version (e.g. v0). Enter the appropriate value for environment variable API_VERSION.

  In the future, this value will be pulled directly from the package version at deploy time. (#10)

- Choose an authorization subdomain token (e.g. auth). Your authorization endpoints will be at <AUTH_SUBDOMAIN_TOKEN>-<API_VERSION>[-<non-prod environment>].<ROOT_DOMAIN>. Double-check your Route 53 zone file to make sure these aren’t already assigned to some other service! Enter your authorization subdomain token for environment variable AUTH_SUBDOMAIN_TOKEN.

- The template assumes your Cognito Authorization UI will be integrated with a web application at ROOT_DOMAIN or at subdomains representing either a prod or a preview front-end environment (e.g. my Next.js Template).

  Enter appropriate subdomains for environment variables WEB_SUBDOMAIN_PROD and WEB_SUBDOMAIN_PREVIEW.

  For local testing, enter the web application’s localhost port for environment variable WEB_LOCALHOST_PORT.

  If you’re using a different front-end application, you’ll have to edit serverless.yml accordingly. You can leave this alone for now and the application will still deploy properly!

- Run the following command to create an API Gateway custom domain at <API_SUBDOMAIN>.<ROOT_DOMAIN>:

      npx getdotenv -- sls create_domain

That’s it. You’re now all set to deploy to any Stage at AWS!
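The service naming rule in the first step above is easy to get wrong by hand, so here is a small sketch of a check you could run before deploying. The function name isValidServiceName is hypothetical. The regular expression simply encodes the stated rule and is not taken from the template or from AWS.

```javascript
// Hypothetical check of the stated rule: starts with a letter; only ASCII
// letters, digits, and hyphens; no trailing hyphen; no consecutive hyphens.
// Each hyphen must be followed by a letter or digit, which covers the last
// two conditions at once.
const isValidServiceName = (name) => /^[A-Za-z](?:-?[A-Za-z0-9])*$/.test(name);

console.log(isValidServiceName('my-aws-api')); // true
console.log(isValidServiceName('my--aws-api')); // false
console.log(isValidServiceName('my-aws-api-')); // false
console.log(isValidServiceName('1-aws-api')); // false
```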

Manual Deployment

Run the following command to deploy a Stage to Environment <env> (e.g. dev, test, or prod) for the configured API Version:

npm run deploy -- -e <env token>

If this is your first time, try deploying to the dev Environment. In this case, you can just run

npm run deploy

Deployments take a few minutes and are differential, so the first one to any Stack will take the longest.

Once your deployment succeeds, your console will display a list of available resources. For example:

    endpoints:
      GET - https://3akn0sj501.execute-api.us-east-1.amazonaws.com/dev/hello
      GET - https://3akn0sj501.execute-api.us-east-1.amazonaws.com/dev/secure-hello
    functions:
      hello: aws-api-template-dev-hello (15 MB)
      secure-hello: aws-api-template-dev-secure-hello (15 MB)

    Serverless Domain Manager:
      Domain Name: aws-api-template.karmanivero.us
      Target Domain: d2jf3xe1z71uro.cloudfront.net
      Hosted Zone Id: Z2FDTNDATAQYW2

The public base path of your API will depend on your settings, but will look like:

https://<API_SUBDOMAIN>.<ROOT_DOMAIN>/<API_VERSION>[-<non-prod environment>]

To test your deployment, open your hello-world public endpoint in a browser, e.g. https://aws-api-template.karmanivero.us/v0-dev/hello. You should see the following content:

{

"message": "Hello world!"

}
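The same check can be scripted instead of done in a browser. The sketch below assumes Node v18, which provides a global fetch. The function name checkHello and the injectable fetchImpl parameter are hypothetical conveniences that let you run the check without touching the network.

```javascript
// Hypothetical smoke test: resolves to true when the endpoint answers with
// the expected hello-world payload. fetchImpl defaults to Node 18's global fetch.
const checkHello = async (url, fetchImpl = globalThis.fetch) => {
  const response = await fetchImpl(url);
  if (!response.ok) return false;
  const body = await response.json();
  return body.message === 'Hello world!';
};

// Example usage against your own deployment (replace the URL):
// checkHello('https://api.mydomain.com/v0-dev/hello').then(console.log);
```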

Automated Deployment

An AWS CodePipeline watches a code repository branch (e.g. at GitHub). When it detects a change, it…

- Imports the code to an AWS S3 bucket.

- Launches an AWS CodeBuild project that…

  - Allocates a virtual machine & imports the code repository.

  - Loads all supporting applications (e.g. the Serverless Framework) and project dependencies.

  - Deploys the Stage defined by API_VERSION and the configured Environment, exactly as you would do from your desktop!

  - Preserves the logs and deallocates the virtual machine.

Each CodePipeline is configured to deploy to the Environment corresponding to the branch it watches. For example, the CodePipeline watching the main branch may be configured to deploy to the prod Environment. On deployment, the CodePipeline will deploy to the Stack corresponding to its designated Environment and its current API Version. If that Stack does not yet exist, the deployment process will create it.

All related CodePipelines share the same CodeBuild project. Each CodePipeline is configured with environment variables that it injects into the CodeBuild project on deployment. These are the environment designator and environment secrets contained in each .env.<ENV>.local file, which are blocked by .gitignore and are never pushed to the code repository.

The CodeBuild process is driven by buildspec.yml.

The following sections describe how to set up your CodePipelines at AWS. If you are plugging into an ongoing project, these should already be in place.

Creating Your First CodePipeline

Your first pipeline in this project is significant because you will also need to create the associated CodeBuild project and maybe also associated service roles. Let’s tackle all of this in reverse order.

Create a CodeBuild Service Role

When you create your CodeBuild project, you have the option of allowing AWS to create & manage an associated service role for you. This can get complicated fast, because you have to trust that AWS will be smart enough to figure out what policies to assign to this role as your project requirements change. If it isn’t, your deployments will fail in ways that are hard to troubleshoot.

Since all of this is purely internal to your AWS account, I suggest you create AWS service role codebuild-service for the CodeBuild use case. Assign the AdministratorAccess policy.

Real AWS administrators are probably breaking out into hives right now, but you’ll thank me.

Create Your Pipeline

If you are building this from scratch, you are probably operating from your main branch, which you will want to associate with your prod Stage. Let’s proceed on that assumption.

Before you begin… Your pipeline will pull your repo and start a build as soon as it is created! Commit all of your local changes and push your commits to GitHub.

From your Pipelines Console, create a new pipeline.

Step 1: Choose Pipeline Settings

- Give the pipeline a name that reflects both the service name and the target Environment: <SERVICE_NAME>-<ENV>

- We will allow CodePipeline to create a service role for us, and then we will use it in all future pipelines. The arguments against this for CodeBuild don’t apply here because the CodePipeline role will always need the same access. Call the new role codepipeline-service.

- Under Advanced Settings, choose all defaults.

Step 2: Add Source Stage

This is where CodePipeline gets its code. The instructions below assume you use GitHub.

- Choose a provider. If you use GitHub, choose GitHub (Version 2). Otherwise, work it out.

- Choose an existing GitHub connection or create a new one. Note that if your code is in an Organization repo, Connections are Organization-specific.

- Choose your repository & branch. In this example we are using main.

- Leave any other defaults & click Next.

Step 3: Add Build Stage

- Choose AWS CodeBuild as your build provider.

- Since this is our first pipeline for this project, we will need to create a CodeBuild project. Click the Create Project button. A popup will appear and take you to a build project creation dialogue. In this dialogue…

  - Choose a project name that will be consistent across the project: <SERVICE_NAME>

  - Use a Managed Image with the following settings:

    - Operating System: Ubuntu

    - Runtime: Standard

    - Image: highest version, currently aws/codebuild/standard:6.0

  - Use an existing service role and choose the codebuild-service role we created earlier.

  - At the bottom of the dialogue, click Continue to CodePipeline.

- Back in the CodePipeline window, use the Add environment variable button to add the contents of .env.<ENV>.local. These variable names & values are case-sensitive!

  Since this is your first time through, you probably haven’t populated any OAUTH client secrets (e.g. GOOGLE_CLIENT_SECRET). If so, just add those variables as placeholders. Note that they may NOT be blank! If undefined, many of these variables must be populated with *. See the .env.<ENV>.local file for specifics.

- Click Next.
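Copying variables into the console by hand invites blank values and typos. As a sketch, the helper below turns the text of a .env.<ENV>.local file into a checklist of name/value pairs and refuses blank values, per the rule above. The function name toPipelineVariables is hypothetical and the parsing is simplified (plain KEY=VALUE lines only). It does not call any AWS API.

```javascript
// Hypothetical helper: converts simple KEY=VALUE dotenv text into a list of
// { name, value } pairs to enter in CodePipeline. Throws on blank values,
// since the pipeline variables may not be empty.
const toPipelineVariables = (dotenvText) =>
  dotenvText
    .split(/\r?\n/)
    .map((line) => line.trim())
    .filter((line) => line && !line.startsWith('#'))
    .map((line) => {
      const i = line.indexOf('=');
      if (i < 1) throw new Error(`Not a KEY=VALUE line: ${line}`);
      const name = line.slice(0, i).trim();
      const value = line.slice(i + 1).trim();
      if (!value) throw new Error(`${name} is blank; use * as a placeholder`);
      return { name, value };
    });

console.log(toPipelineVariables('# secrets\nGOOGLE_CLIENT_SECRET=*'));
```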

Step 4: Add Deploy Stage

We’re using the Serverless Framework to deploy from buildspec.yml, so click the Skip deploy stage button & confirm.

Step 5: Review

Review your settings & click Create pipeline. The new pipeline will immediately display a UI, pull your repo, and commence a build.

Creating Your Next Pipeline

Once you have an operating CodePipeline, creating the next one is easy! Just follow these steps:

- You can deploy to any Environment from any branch, but when you create a pipeline you have to choose a specific source branch and target Environment. Make sure the Environment you choose has corresponding .env.<ENV> & .env.<ENV>.local files in your repository with environment variables correctly populated.

- Create the new branch (e.g. dev or test) and push it to your remote repository.

- Choose an existing pipeline from the same project on your Pipelines Console, click into it, and click the Clone pipeline button.

- Choose a pipeline name that follows your established naming pattern: <SERVICE_NAME>-<ENV>

- Choose an existing service role and pick the CodePipeline service role we created earlier (e.g. codepipeline-service).

- Choose all other defaults and click the Clone button.

- Once the pipeline is created, click the Edit button.

- Edit the Source pipeline stage, edit the Source action, and change the Branch name to your selected repo branch. Click Done on the popup and the Source pipeline stage.

- Edit the Build pipeline stage, edit the Build action, and change the environment variable values to match the .env.<ENV>.local file corresponding to the new pipeline’s target Environment. Click Done on the popup and the Build pipeline stage.

- Click the Save button to save the pipeline changes and then click the Release change button to run the pipeline in its new configuration. It will pull your code from the new branch and commence a build.

Creating a Maintenance Pipeline

The next deployment following any API Version upgrade will create a new Stack reflecting the new API Version. The old stack will still be running: it will simply no longer receive new deployments.

You may wish to continue maintenance development in the old major version to support existing users who have not yet migrated to the new version.

To accomplish this, follow these steps:

- release-it creates a GitHub tag at each release. Execute the following commands to create a dev branch from that tag and then push it back to GitHub:

      git checkout <highest previous major version tag> -b v<previous major version>-dev
      # Ex: git checkout 0.1.2 -b v0-dev
      git push --set-upstream origin v<previous major version>-dev

  If the highest tag in the previous major version were 0.1.2, this will have created new branch v0-dev at that tag.

- Follow the instructions in Creating Your Next Pipeline to clone a new CodePipeline and attach it to this branch. Any new deployments on this pipeline will deploy into the previous API Version’s existing dev Stack.

- Repeat the previous steps for your other Environments (e.g. test and prod).

You can continue to release new versions within this major version. Just don’t advance this branch to a new major version, or you will overwrite your existing next-version stacks!
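Finding the highest tag in the previous major version is the one fiddly part of the steps above. Here is a rough sketch of that selection, assuming plain MAJOR.MINOR.PATCH tags (prerelease tags are ignored). The helper names are hypothetical. You could feed it the output of git tag.

```javascript
// Split a tag like 0.1.2 (or v0.1.2) into numeric [major, minor, patch].
const parseTag = (tag) => tag.replace(/^v/, '').split('.').map(Number);

// Hypothetical helper: highest release tag within a given major version.
// Returns undefined when no tag matches.
const highestTagForMajor = (tags, major) =>
  tags
    .filter((tag) => /^v?\d+\.\d+\.\d+$/.test(tag) && parseTag(tag)[0] === major)
    .sort((a, b) => {
      const [, aMinor, aPatch] = parseTag(a);
      const [, bMinor, bPatch] = parseTag(b);
      return bMinor - aMinor || bPatch - aPatch;
    })[0];

// Hypothetical helper: maintenance branch name for a tag and Environment.
const maintenanceBranch = (tag, env) => `v${parseTag(tag)[0]}-${env}`;

const tag = highestTagForMajor(['0.0.9', '0.1.2', '0.1.0', '1.0.0'], 0);
console.log(tag); // 0.1.2
console.log(maintenanceBranch(tag, 'dev')); // v0-dev
```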

TODO

Figure out how to prevent this from happening (#11).

Deleting a Stack

Some Stack resources have properties that can only be set at create time (e.g. Cognito User Pool AliasAttributes). If you wish to change such a property, and if you will not unduly impact current users, the easiest option by far is to delete the entire stack and recreate it with the next deployment.

As you change your Stack configuration during the development process, your Stack might also simply become unstable and stop accepting new deployments. Deleting the Stack is often an efficient way to recover from a configuration error and get things moving again.

Use with extreme caution! Stack deletion will also eliminate all user accounts in the related user pool!

To delete a Stack, follow these instructions:

- Find your API subdomain at API Gateway Custom Domains. Delete all API mappings related to the Stack.

- Find the Stack in the CloudFormation console and click through to the Stack detail.

- Under the Resources tab, find any S3 Buckets and delete their contents.

- Delete the Stack.

If you intend to redeploy this stack, you may need to wait about 20 minutes for the CNAME records of the deleted stack to flush from DNS. If you redeploy too soon, you may see an error indicating a DNS conflict.

TODO

Automate this process (#10).

Some Thoughts About DevOps

It’s your project. Do what you want! But if you’re interested, here’s a rational way to go about this…

- During the development process you’re probably working in some feature branch & trying to run your project locally. The corresponding command in our case is

      npm run offline

  When this fails, you iterate until it doesn’t.

- Local builds only take you so far, and there are a ton of AWS services that can only exist remotely. So your next step is to try a remote dev build using

      npm run deploy

  If you’ve set up your CodePipelines, the same thing will happen when you merge your feature branch with the dev branch.

- This remote build will often fail catastrophically and require you to delete the dev stack & start over. Once it doesn’t, and assuming all your other tests pass, you can create a prerelease and deploy the stable build to your test Stack for integration testing by merging your dev branch into test.

- Once your integration tests pass, you can create a release and deploy the new feature into production by merging your test branch into main.

- If your new release is a major release, create a maintenance pipeline to support maintenance & backward compatibility for the previous major release.

Authentication

User authentication is provided by AWS Cognito.

By default, the setup supports sign up & sign in via the Cognito hosted UI. When a user signs up with an email/password combination, AWS will send an email with a code to confirm the email.

The template supports the Google federated identity provider but it is disabled by default. See Add Google Authentication below for more info.

Backwards Compatibility

An API Version upgrade generates a new Stack that includes an independent Cognito User Pool on a unique set of endpoints. You may not want this! If there have been no breaking changes to your User Pool, you probably want to retain the user accounts created in the previous API Version.

To accomplish this, simply add the relevant Cognito User Pool ARN to environment variable COGNITO_USER_POOL_ARN in file .env.<ENV>.

Add Google Authentication

AWS Cognito supports a wide variety of federated identity providers. Google is the only one currently supported by this template.

To enable Google authentication, follow these steps:

- Follow the Google-related instructions at this page to set up a Google OAUTH2 application. Use the following Stage-specific settings:

  - Authorized Javascript Origins: <AUTH_SUBDOMAIN_TOKEN>-<API_VERSION>[-<non-prod ENV>].<ROOT_DOMAIN>

  - Authorized Redirect URIs: <AUTH_SUBDOMAIN_TOKEN>-<API_VERSION>[-<non-prod ENV>].<ROOT_DOMAIN>/oauth2/idpresponse

- Add the resulting Client ID to environment variable GOOGLE_CLIENT_ID in file .env.<ENV>.

- Add the resulting Client Secret to environment variable GOOGLE_CLIENT_SECRET in file .env.<ENV>.local.

- Add the same Client Secret to environment variable GOOGLE_CLIENT_SECRET in the relevant CodePipeline configuration.

Add Support For Other Federated Identity Providers

If you configure new federated identity providers following the patterns in this template, then they will only be ENABLED when the relevant client ID, client secret, etc. are added to the project configuration.

This page contains instructions for setting up Cognito-compatible OAUTH2 apps at Facebook, Amazon, Google, and Apple.

It is also possible to set up custom OpenId Connect (OIDC) providers which presumably will account for Twitter etc. There is an AWS reference here.

Once you understand the requirements of your new identity provider, follow these steps:

- Add the relevant new non-secret environment variables (e.g. GOOGLE_CLIENT_ID) to all .env.<ENV> files. If no value is available, set the value to *.

- Add the relevant new secret environment variables (e.g. GOOGLE_CLIENT_SECRET) to all .env.<ENV>.local files. If no value is available, set the value to *.

- Add the same secret environment variables (e.g. GOOGLE_CLIENT_SECRET) to all .env.<ENV>.local.template files. Set all values to *.

- Add the same secret environment variables (e.g. GOOGLE_CLIENT_SECRET) to all CodePipelines. If no value is available, set the value to *.

- Add a new identity provider Condition in serverless.yml. Its purpose is to test whether the necessary environment variables have been populated to support enabling the identity provider.

  The new condition should be similar to CreateIdentityProviderGoogle below and reference the new provider’s environment variables:

      resources:
        Conditions:
          CreateUserPool: !Equals ["${env:COGNITO_USER_POOL_ARN}", "*"]
          CreateIdentityProviderGoogle: !And
            - !Condition CreateUserPool
            - !Not
              - !Or
                - !Equals ["${env:GOOGLE_CLIENT_ID}", "*"]
                - !Equals ["${env:GOOGLE_CLIENT_SECRET}", "*"]

- Create a new User Pool Identity Provider in serverless.yml. It should be similar to the one below but meet the requirements of the new provider:

      UserPoolIdentityProviderGoogle:
        Type: AWS::Cognito::UserPoolIdentityProvider
        Condition: CreateIdentityProviderGoogle
        Properties:
          UserPoolId: !Ref UserPool
          ProviderName: Google
          ProviderDetails:
            client_id: "${env:GOOGLE_CLIENT_ID}"
            client_secret: "${env:GOOGLE_CLIENT_SECRET}"
            authorize_scopes: "profile email openid"
          ProviderType: Google
          AttributeMapping:
            email: email

- Conditionally reference the new User Pool Identity Provider in serverless.yml under UserClient.Properties.SupportedIdentityProviders. Reference the new Condition and the new User Pool Identity Provider, similar to how it is done with Google below:

      SupportedIdentityProviders:
        - COGNITO
        - !If
          - CreateIdentityProviderGoogle
          - Google
          - Ref: AWS::NoValue

Test your setup by attempting a remote deployment. If your deployment succeeds, you will see your provider configured under Federated identity provider sign-in on the relevant User Pool’s Sign-in experience tab at your AWS Cognito Console.
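If the nested CloudFormation condition functions are hard to read, it may help to see the same logic as ordinary boolean expressions. The sketch below mirrors the two Google conditions from serverless.yml in JavaScript for illustration only. Nothing like it runs at deploy time, and the function names are hypothetical.

```javascript
// The * placeholder marks a variable as "not provided".
const PLACEHOLDER = '*';

// Mirrors CreateUserPool: create a pool only when no existing ARN is supplied.
const createUserPool = (env) => env.COGNITO_USER_POOL_ARN === PLACEHOLDER;

// Mirrors CreateIdentityProviderGoogle: requires a new pool AND both Google
// values populated (neither one left as the placeholder).
const createIdentityProviderGoogle = (env) =>
  createUserPool(env) &&
  !(
    env.GOOGLE_CLIENT_ID === PLACEHOLDER ||
    env.GOOGLE_CLIENT_SECRET === PLACEHOLDER
  );

console.log(
  createIdentityProviderGoogle({
    COGNITO_USER_POOL_ARN: '*',
    GOOGLE_CLIENT_ID: 'my-client-id',
    GOOGLE_CLIENT_SECRET: 'my-client-secret',
  })
); // true
```

A condition for a new provider would follow the same shape with that provider’s variables swapped in.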

Endpoints

| Endpoint | Pattern | dev example | prod example |
|---|---|---|---|
| Base API Path | https://<API_SUBDOMAIN>.<ROOT_DOMAIN>/<API_VERSION>[-<non-prod ENV>] | https://api.mydomain.com/v0-dev/hello | https://api.mydomain.com/v0/hello |
| Authorized Javascript Origin | <AUTH_SUBDOMAIN_TOKEN>-<API_VERSION>[-<non-prod ENV>].<ROOT_DOMAIN> | https://auth-v0-dev.mydomain.com | https://auth-v0.mydomain.com |
| Authorized Redirect | <AUTH_SUBDOMAIN_TOKEN>-<API_VERSION>[-<non-prod ENV>].<ROOT_DOMAIN>/oauth2/idpresponse | https://auth-v0-dev.mydomain.com/oauth2/idpresponse | https://auth-v0.mydomain.com/oauth2/idpresponse |
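The patterns in the table can be expressed as a small function, which makes the prod special case explicit. This is a sketch only: buildEndpoints and its parameter names are hypothetical, chosen to echo the environment variables, and the template itself derives these values in its configuration.

```javascript
// Hypothetical helper mirroring the endpoint patterns in the table.
const buildEndpoints = ({
  apiSubdomain,
  authSubdomainToken,
  apiVersion,
  rootDomain,
  env,
}) => {
  // prod omits the environment suffix; every other Environment appends it.
  const stage = env === 'prod' ? apiVersion : `${apiVersion}-${env}`;
  const authOrigin = `https://${authSubdomainToken}-${stage}.${rootDomain}`;
  return {
    baseApiPath: `https://${apiSubdomain}.${rootDomain}/${stage}`,
    authorizedOrigin: authOrigin,
    authorizedRedirect: `${authOrigin}/oauth2/idpresponse`,
  };
};

const config = {
  apiSubdomain: 'api',
  authSubdomainToken: 'auth',
  apiVersion: 'v0',
  rootDomain: 'mydomain.com',
};

console.log(buildEndpoints({ ...config, env: 'dev' }));
console.log(buildEndpoints({ ...config, env: 'prod' }));
```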

Issues

Secure Certificate

Normally we would specify a certificate on karmanivero.us and *.karmanivero.us using the serverless-certificate-creator plugin. The required entry in serverless.yml would look like

    custom:
      customCertificate:
        certificateName: "karmanivero.us"
        hostedZoneNames: "karmanivero.us."
        subjectAlternativeNames:
          - "*.karmanivero.us"

The alternative name is currently creating an issue with this. It looks like the plugin is trying to submit duplicate validation records to the zone file, resulting in an error. See this pull request for more info.

Meanwhile, let’s create & verify the certificate manually using the ACM Console and then reference the certificate by ARN in serverless.yml.

serverless.yml Validation

There is a conditional function on private API endpoints that selects authentication either by the Cognito User Pool created on the stack or by the ARN passed in via the COGNITO_USER_POOL_ARN environment variable. It looks like this:

    secure-hello:
      handler: api/secure/hello.get
      description: GET /secure-hello
      events:
        - http:
            path: secure-hello
            method: get
            cors: true
            authorizer:
              name: UserPoolAuthorizer
              type: COGNITO_USER_POOLS
              # *** CONDITIONAL ***
              arn: !If
                - CreateUserPool
                - !GetAtt UserPool.Arn
                - ${env:COGNITO_USER_POOL_ARN}
              # *******************
              claims:
                - email

This is perfectly valid and works just fine, but the Serverless Framework issues the following warning on deployment:

Warning: Invalid configuration encountered

at 'functions.secure-hello.events.0.http.authorizer.arn': unsupported object format

Learn more about configuration validation here: http://slss.io/configuration-validation

You can ignore this warning and track the issue here.

User Accounts

As of now, users can create multiple accounts with the same email, but different user names. (#12)

Federated identities (i.e. social logins) with the same email also generate new accounts. (#13)
